Notes on Latent Attention

story: i was initially nerdsniped by a dwarkesh tweet on multi-head latent attention (MLA), the attention variant used in deepseek-v2 and v3 in 2024. then, researching, i mistook this for the latent cross-attention used in the perceiver architecture in 2021, so that's how i ended up here. these will resemble informal notes.

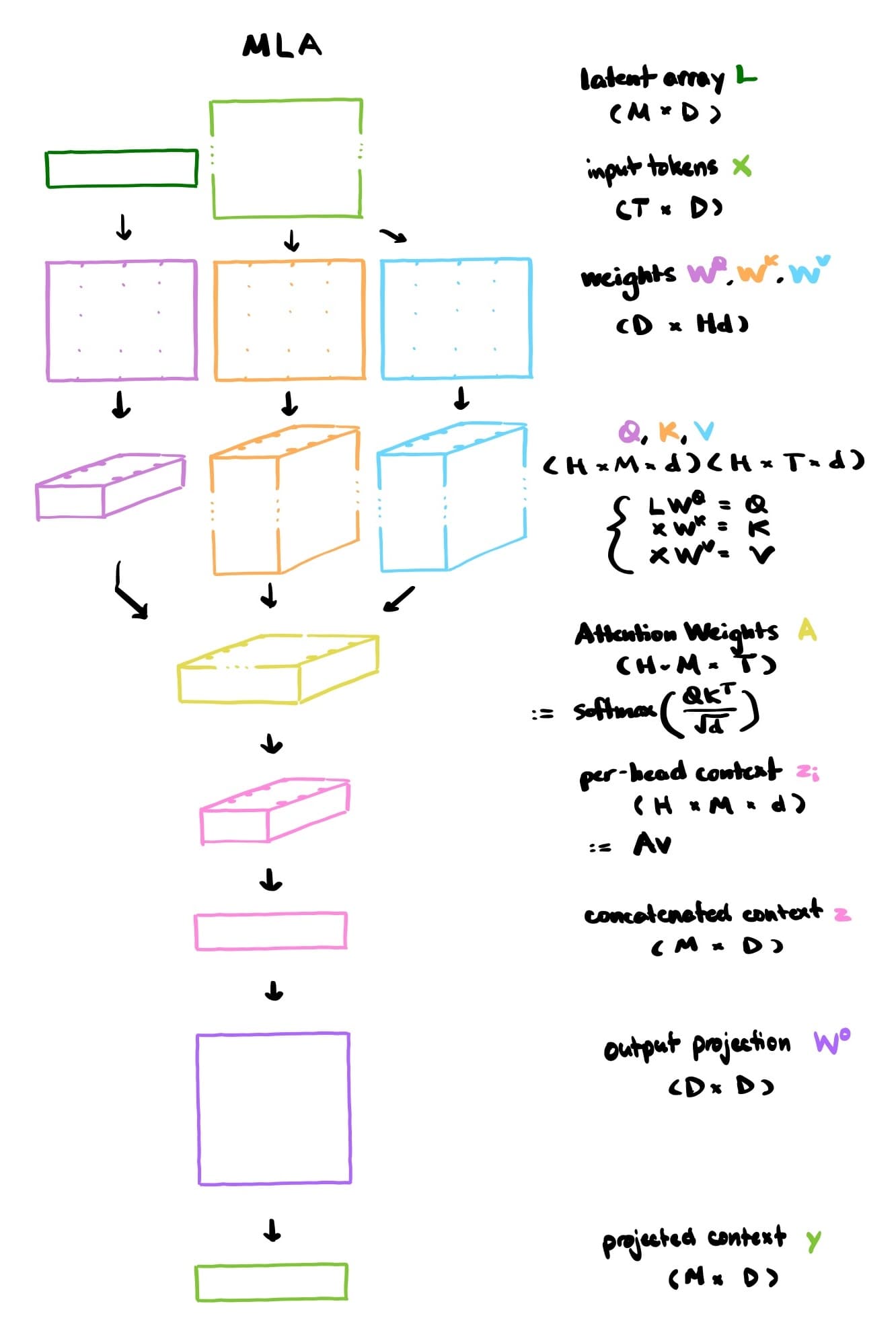

Iterative latent cross-attention, known as latent attention for short, is a variant of the original attention mechanism that introduces a learned latent array to save significant memory and computation compared to running self-attention over the full input. if we let T be the number of tokens in the input sequence, Jaegle et al. introduce a constant M << T and make an M x D learned latent array. this latent array is used instead of the input tokens to create the query matrix Q (the keys K and values V still come from the input), so the query matrix has M rows instead of T. down the line, shrinking the query dimension also shrinks the attention weights matrix, the softmax weights, the per-head context, the concatenated context, and ultimately the projected context, all from T rows to M rows. this achieves a complexity drop from quadratic T x T attention to linear M x T attention (M is constant), giving O(MT) memory for the attention weights and O(MTD) FLOPs.
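A minimal NumPy sketch of the shape bookkeeping above, assuming the inputs and the latents share the same width D, using random (untrained) weights, and leaving out the layer norms, residual connections and MLPs that the actual Perceiver blocks wrap around the attention. The function name `latent_cross_attention` and its arguments are hypothetical, chosen for illustration.

```python
import numpy as np

def softmax(x, axis=-1):
    # subtract the row max for numerical stability before exponentiating
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def latent_cross_attention(x, latents, w_q, w_k, w_v, w_o, num_heads):
    """Hypothetical sketch: x is (T, D) input tokens, latents is (M, D).
    Queries come from the latents; keys and values come from the inputs."""
    T, D = x.shape
    M = latents.shape[0]
    d_head = D // num_heads
    # project and split into heads: Q is (H, M, d_head), K and V are (H, T, d_head)
    q = (latents @ w_q).reshape(M, num_heads, d_head).transpose(1, 0, 2)
    k = (x @ w_k).reshape(T, num_heads, d_head).transpose(1, 0, 2)
    v = (x @ w_v).reshape(T, num_heads, d_head).transpose(1, 0, 2)
    # attention scores are (H, M, T) instead of (H, T, T)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)
    weights = softmax(scores, axis=-1)                  # softmax weights, (H, M, T)
    context = weights @ v                               # per-head context, (H, M, d_head)
    concat = context.transpose(1, 0, 2).reshape(M, D)   # concatenated context, (M, D)
    return concat @ w_o, weights                        # projected context, (M, D)

rng = np.random.default_rng(0)
T, M, D, H = 1024, 64, 128, 8
x = rng.standard_normal((T, D))
latents = rng.standard_normal((M, D))  # learned in practice; random here
w_q, w_k, w_v, w_o = (rng.standard_normal((D, D)) / np.sqrt(D) for _ in range(4))
out, weights = latent_cross_attention(x, latents, w_q, w_k, w_v, w_o, H)
print(out.shape, weights.shape)  # (64, 128) (8, 64, 1024)
```

The only change from ordinary multi-head attention is where Q comes from: feed `x` instead of `latents` into the query projection and the scores go back to (H, T, T).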

The main question I had was: will the input sequence really retain most of its meaning after being compressed into a latent array? the Perceiver paper's experiments suggest yes. another benefit of having a fixed-length latent array is that we can more easily process other data modalities: the paper uses images, audio sequences, and point clouds as examples. these need many more tokens to represent, so under quadratic attention they demand a lot of compute. with a constant M, we essentially get a variant of attention that is linear in T, while retaining an adequate representation of the input.
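A back-of-the-envelope count of attention score entries makes the linear vs. quadratic gap concrete. The numbers are illustrative assumptions, not taken from these notes: a 224 x 224 image flattened to one token per pixel, and M = 512 latents. `attention_score_entries` is a hypothetical helper.

```python
def attention_score_entries(num_queries, num_keys, num_heads=1):
    # one score per (head, query, key) pair
    return num_heads * num_queries * num_keys

T = 224 * 224  # assumed: one token per pixel of a 224x224 image
M = 512        # assumed latent count, for illustration
full = attention_score_entries(T, T)    # T x T self-attention
latent = attention_score_entries(M, T)  # M x T latent cross-attention
print(T, full, latent, full // latent)  # 50176 2517630976 25690112 98

# doubling T: full attention grows 4x, latent cross-attention only 2x
print(attention_score_entries(2 * T, 2 * T) // full,
      attention_score_entries(M, 2 * T) // latent)  # 4 2
```

The savings factor is just T / M, so it keeps growing with longer inputs as long as M stays fixed.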

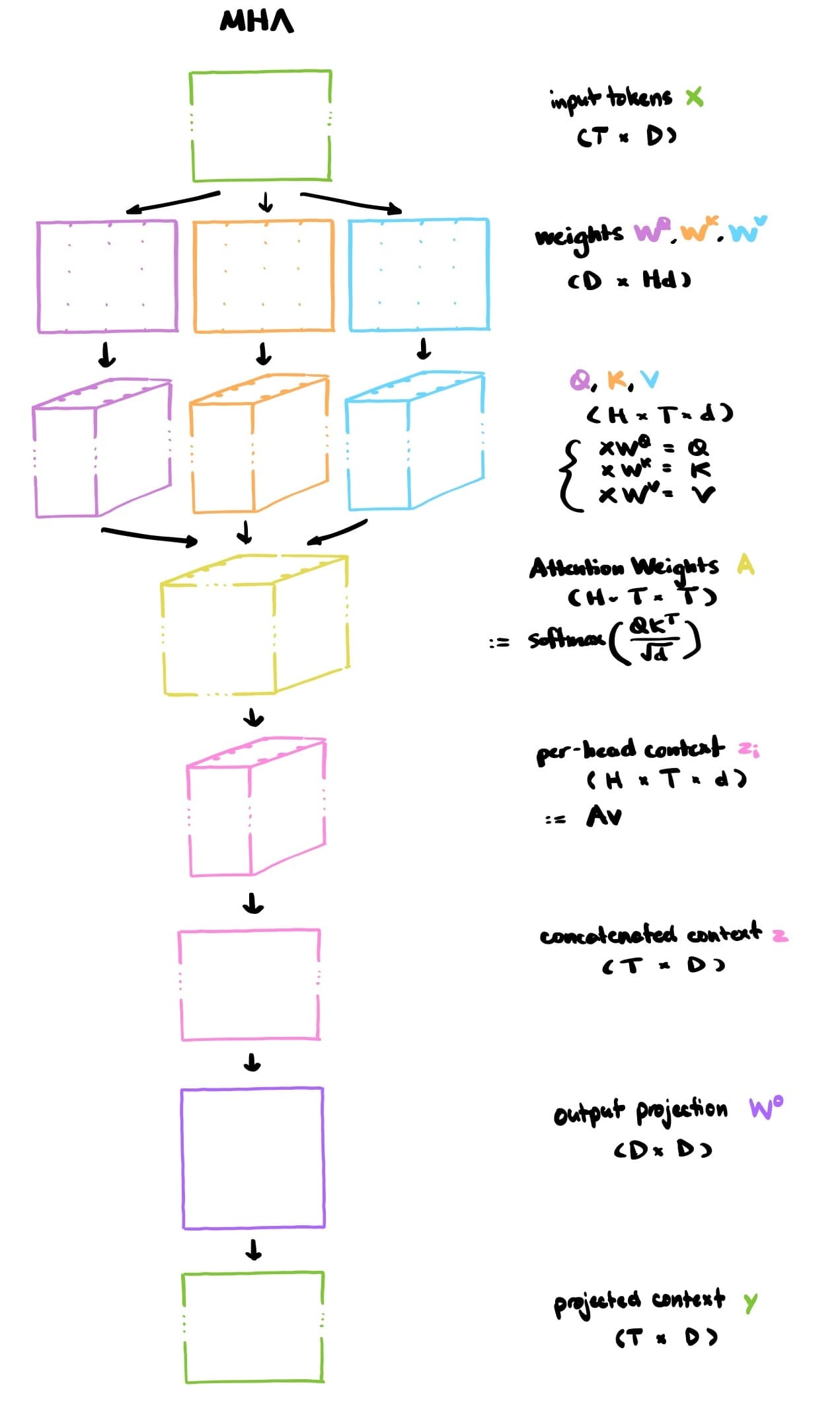

Here are some visual comparisons between original multi-head attention and multi-head latent attention (à la the Perceiver architecture):

Now onto the real multi-head latent attention, hopefully in the near future.

references

- Jaegle, A., Gimeno, F., Brock, A., Zisserman, A., Vinyals, O., & Carreira, J. (2021). Perceiver: General Perception with Iterative Attention. arXiv preprint arXiv:2103.03206.