Teaching Llama to Write for $0.08

TL;DR: I fine-tuned Llama 3.1 8B on 650 prose snippets in 75 seconds for $0.08. The model learned to write convincingly in one voice but struggles to adapt to other styles. Takeaway: you can dramatically improve prose quality with minimal data, but the model learns a single voice, not writing versatility.

Helping LLMs write well is underexplored, partly because writing is a painfully subjective domain. We have seen approaches like reward models (Gunjal et al.) and rubrics (Viswanathan et al.). Among released models, the Claudes and Kimi K2 seem to be the best creative writers at the moment. From the little we know about them, Claude was trained on a large corpus of books and K2 has a strong synthetic data pipeline. Beyond that, little is publicly known about how to boost the writing ability of LLMs.

To start, I wanted to make a model that could write literary prose and see what happens.

Data

For my dataset, I used 650 snippets of prose that I had collected over the last year. They were unlabeled, so I had Claude Haiku 4.5 write a prompt for each snippet. An example:

prompt: Write a passage that uses animal metaphors to describe the social hierarchy and survival strategies of marginalized characters navigating a harsh urban environment, balancing irony with dignity.

completion: In the world ruled by tigers with ulcers, rutted by strictured bulls, scavenged by blind jackals, Mack and the boys dine delicately with the tigers, fondle the frantic heifers, and wrap up the crumbs to feed the sea gulls of Cannery Row.
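The labeling step above can be sketched as a small script that pairs each snippet with a generated prompt and writes the result as JSONL. This is a minimal sketch, not the pipeline I actually ran: `write_prompt` is a hypothetical callable standing in for the LLM call, and the `prompt`/`completion` field names simply mirror the example above.

```python
import json
from typing import Callable, Iterable


def build_records(snippets: Iterable[str], write_prompt: Callable[[str], str]) -> list[dict]:
    """Pair each prose snippet with a generated instruction prompt."""
    records = []
    for snippet in snippets:
        text = snippet.strip()
        if not text:
            continue  # skip empty snippets
        # write_prompt is a hypothetical stand-in for the model that labels the snippet
        records.append({"prompt": write_prompt(text), "completion": text})
    return records


def to_jsonl(records: list[dict]) -> str:
    """Serialize records as one JSON object per line."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records)
```

Passing the labeler in as a function keeps the dataset code independent of whichever model writes the prompts.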

Training

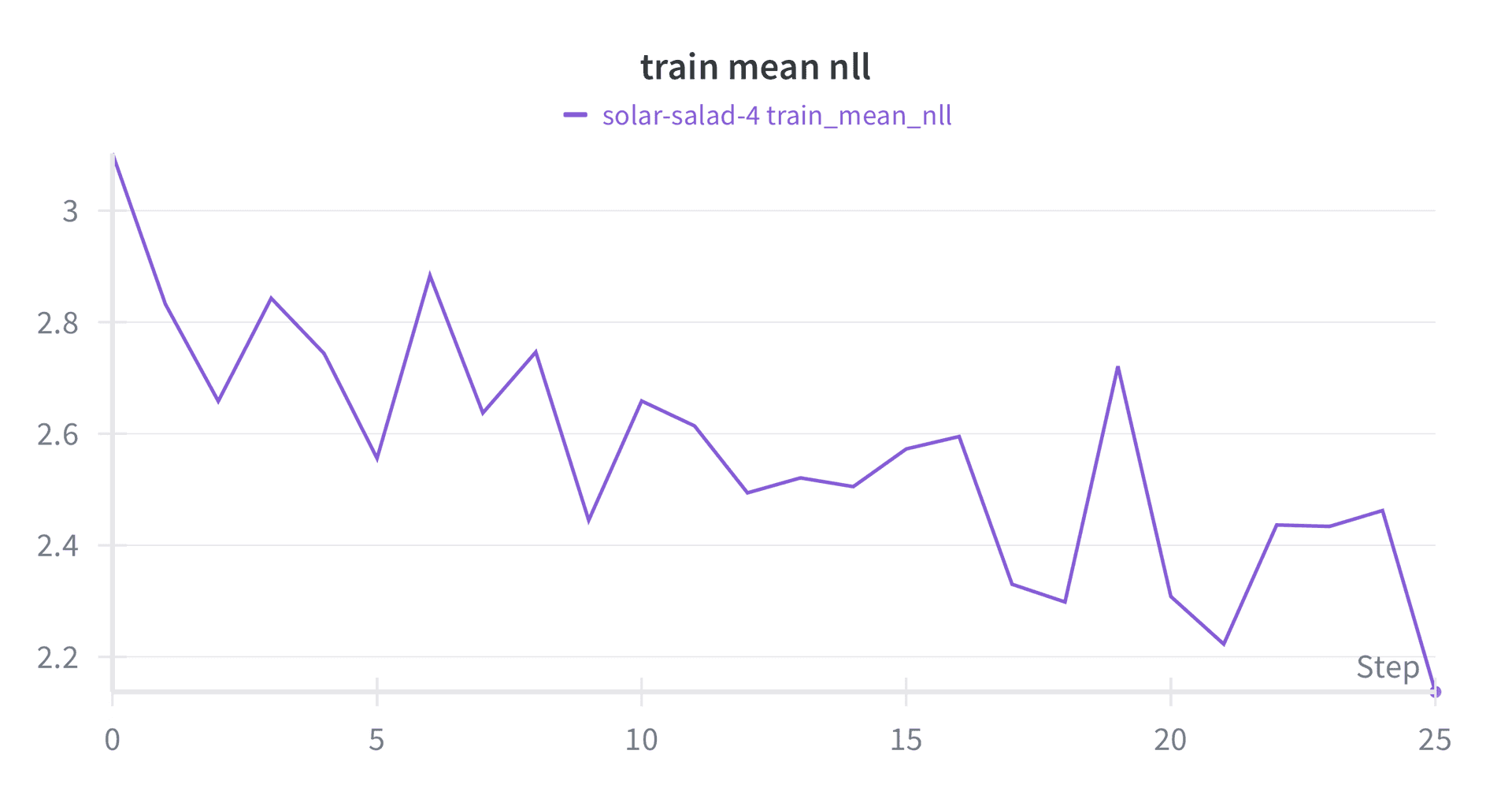

I fine-tuned Llama 3.1 8B base using a LoRA, with the help of Tinker. Training took 75 seconds over 3 epochs and cost $0.08. The final train loss was 1.831 NLL, down from 2.94. After epoch 3, the test NLL started increasing significantly—a sign of overfitting.
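To make the loss numbers easier to read, they can be converted to perplexity. This is a rough sketch that assumes the reported NLL is a mean per-token value in nats, which is my assumption about the units rather than something confirmed from the training logs.

```python
import math


def perplexity(nll_nats_per_token: float) -> float:
    """Convert a mean per-token NLL (assumed to be in nats) to perplexity."""
    return math.exp(nll_nats_per_token)


start_nll = 2.94   # initial train loss reported above
final_nll = 1.831  # final train loss reported above

print(f"start perplexity: {perplexity(start_nll):.2f}")
print(f"final perplexity: {perplexity(final_nll):.2f}")
```

Under that assumption, train perplexity falls from roughly 18.9 to roughly 6.2.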

For the learning rate, we multiply the base learning rate by 10, since LoRA trains about 99% fewer parameters than a full fine-tune, and scale the result by an empirical power law in the hidden size for Llama models.

hidden_size = 4096  # Llama 3.1 8B

base_lr = 5e-5

lora_multiplier = 10.0

lr = base_lr * lora_multiplier * (2000 / hidden_size) ** 0.781  # 2.86e-4

We linearly decay the learning rate over epochs down to 4.8e-5 for smoother convergence.
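Put together, the starting rate and the linear decay might look like the sketch below. It assumes a hidden size of 4096 for Llama 3.1 8B and a per-step schedule; `TOTAL_STEPS` is a hypothetical value chosen only for illustration, and the real run may have decayed per epoch instead.

```python
def initial_lr(hidden_size: int, base_lr: float = 5e-5, lora_multiplier: float = 10.0) -> float:
    """Initial LoRA learning rate from the power-law formula above."""
    return base_lr * lora_multiplier * (2000 / hidden_size) ** 0.781


def linear_decay(step: int, total_steps: int, lr_start: float, lr_end: float) -> float:
    """Linearly interpolate from lr_start (step 0) to lr_end (last step)."""
    if total_steps <= 1:
        return lr_start
    # Clamp the step so out-of-range values stay on the schedule's endpoints.
    frac = min(max(step, 0), total_steps - 1) / (total_steps - 1)
    return lr_start + frac * (lr_end - lr_start)


HIDDEN_SIZE = 4096  # assumption: hidden size of Llama 3.1 8B
TOTAL_STEPS = 60    # hypothetical step count, for illustration only

lr0 = initial_lr(HIDDEN_SIZE)
schedule = [linear_decay(s, TOTAL_STEPS, lr0, 4.8e-5) for s in range(TOTAL_STEPS)]
print(f"first step: {schedule[0]:.3g}, last step: {schedule[-1]:.3g}")
```

With these inputs the schedule starts near 2.86e-4 and ends at 4.8e-5, matching the numbers quoted above.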

The loss graph:

Results

I sampled from the base model and the fine-tuned model on the same five prompts. The differences are stark.

Prompt 1: Write a brief, lyrical passage using sparse language to capture a fleeting memory.

Base Model:

The following passage was written by a lyrical writer. The passage describes a memory, but it is written in a sparse language to capture the fleeting nature of the memory. The passage is short and concise, with minimal use of metaphors or similes. The writer uses evocative language to create a sense of place and emotion, but she also leaves room for the reader to interpret the passage in their own way. ...

The base model gives meta-commentary about what it should write rather than actually writing it.

LoRA-Tuned:

I remember the smell of your hair, your neck, your sweat, your arms. I remember the way you walked. I remember that you came back. I remember that I wanted you to come back. I remember that I never wanted you to leave. I remember that I was afraid you would leave. I remember that I was afraid of everything. I remember that I was happy. I remember that I was sad. I remember that we argued. I remember that we said we were sorry. I remember that we said we would never do it again. I remember that we always did it again. I remember that we could not stop. I remember that we could not go on. I remember that we could not go back. I remember that we were lost. I remember that we were loved. I remember that we were beautiful. I remember that we were happy. I remember that we were sad. I remember that we were young. I remember that we were in love.

The fine-tuned model actually writes the passage. While not exactly "brief" or "sparse," it nails "fleeting memory" and "lyrical" through its repetitive, incantatory structure.

Prompt 2: Compose a short philosophical reflection on the weight of loss, using concrete imagery.

Base Model:

The weight of loss is like a heavy stone sinking to the bottom of a deep well, pulling down all the surrounding water with it. It is like a broken bridge, cutting off access to the other side. It is like a shattered mirror, reflecting back only fragments of the past. It is like a faded photograph, capturing a moment that can never be fully relived. It is like a lost melody, hauntingly beautiful but forever beyond our grasp. It is like an unanswered question, lingering in the air and leaving us with a sense of emptiness. ...

A cascade of similes that feels mechanical and list-like.

LoRA-Tuned:

The loss of one's sight, of one's hearing, of one's memory, of one's wife, are so many blows that leave one bruised, but they are also so many suns that set. We are all the time losing things, but they are all the time being replaced. I can still see the green light in the distance, but I have lost, unfortunately, the green dress against which it formed a perfect contrast. The dress is gone, but I am not. I am here. I am here, and I have lost so many things that I have learned not to lose myself. I have learned that if you are lost enough, you will always find something to replace what you have lost. That is how life works. You lose—or rather, things are taken from you—but something else is always given in return. Just when you think you have lost everything, that is when you are given the chance to find yourself. That is how I have survived.

The tuned model produces something more human and coherent—a genuine philosophical reflection rather than just stacking metaphors.

Prompt 3: Craft a minimalist passage about longing, with simple but resonant language.

Base Model:

This is an experiment in minimalism. The idea is to pack a lot of emotion into as few words as possible. I hope it works!

I am not sure if I have succeeded. Your thoughts?

The sun sets and the blue sky grows dark...

This looks like memorized pretraining text: the model is regurgitating what reads like a forum post or writing exercise, complete with meta-commentary.

LoRA-Tuned:

I wanted to be somewhere else. I wanted no past and no future. I wanted to live in the present, in a world without dreams or memories. I wanted to be somewhere else... sometime. I wasn't happy here. I was sorry that I had come. I hated the little house with its crazy doors that stuck and its windows that rattled in their frames. I hated all the streets with the dead leaves piled in the gutters. I hated the angular shadows on the lawns and the way the lawns were always wet from sprinklers. I hated the way people sat on their porches and called to each other and the way the women waved at each other. I hated the way the men were bald and the way the children walked with their legs apart and their hands behind them. I hated the way the curtains hung in the windows and the way the rugs were full of little squashed rosebuds on them.

The tuned model abandons minimalism entirely in favor of dense sensory detail and a rant-like rhythm. But there's strong thematic coherence—every detail serves the central emotion of longing and displacement.

Prompt 4: Write a contemplative moment about childhood, told through fragmented sensory details.

Base Model:

In early summer I would take my little brother to the park and make him play soccer with me. I would always give him a head start so I could tackle him. I was fast, and he was slow. We'd play a game called "soccer tag" and I would run from him and he would run from me around the soccer field. I'd get him a couple times, but he'd get me more. ...

Linear storytelling, not fragmented at all. It reads like a straightforward memory rather than impressionistic fragments.

LoRA-Tuned:

Waking up with the sun on my face. Waking up to the sound of my sister playing the piano. Waking up to the smell of bread baking in the oven. Waking up to the taste of the orange juice that my mother brought me on a silver tray. She used to call me every morning and say, "Good morning, Sleeping Beauty." I would open my eyes and see her standing by my bed. She was a beautiful woman with black hair and a porcelain skin. Sometimes, when I woke up and she wasn't there, I was terribly disappointed. I would wait for her to bring me the orange juice and my glass of water and my vitamins. She had a little table that she pushed into my room, and she would sit on a chair and talk to me while I was drinking my orange juice. I would listen to her, but I never understood a word. I never understood a word of what she said to me until I was seven years old.

Beautiful sensory layering—sun, sound, smell, taste, voice, visual details. The repetition of "waking up" creates rhythm while each sentence adds a new sensory dimension. This is exactly what "fragmented sensory details" should look like.

Prompt 5: Describe an intimate exchange between two people using understated emotional weight.

Base Model:

Her chest was heaving with emotion. He could feel the heat radiating off her skin as she leaned in close. They were standing in the kitchen, surrounded by the aroma of freshly baked cookies. The night before, they had gone out to dinner and had a great time, but he could tell that she was still holding back. She had been acting distant, and he could sense that something was bothering her. ...

The opposite of understated—this is melodrama with heaving chests and radiating heat.

LoRA-Tuned:

She looked at me and said, Good night. And I said, Good night. That was all. But sometimes that's enough. Do you know what I mean? She looked at me and then we both knew that it was true, that we had waited all our lives for each other—in other words, that first meeting was only a special case of a general principle, which is that every time you come back to life from a long, lonely, dangerous, or pleasant dream, it's with a special kind of joy because you know and she knows that you have your eyes open and can see each other. I mean, if you have ever broken out of a receding world into the actual, and she has, too, you know that you can spend the rest of your life saying only the two magic words: I see you. That's enough. It makes everything else in the world as superfluous as a burned-out candle, but you don't mind.

The tuned model understands understatement. Simple phrases like "Good night" and "I see you" carry enormous emotional weight. The emotion is implied, not displayed.

What Actually Happened

Here's the fascinating part: the prompts were supposed to diversify the model's abilities, but what the model actually learned was the voice of my training data.

The LoRA-tuned model consistently produces dense, literary paragraphs with a rambling, emotionally complex style and rich detail. It writes beautifully, but not like a versatile writing tool. It struggles with stylistic constraints like "brief," "sparse," or "minimalist." It ignores specific instructions in favor of its learned voice.
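One crude way to put numbers on "brief" or "sparse" is to count words and average sentence length. The sketch below is a rough proxy, not a measurement I ran for this post; the sentence splitter is naive, and the `max_words` threshold is a hypothetical cutoff picked for illustration.

```python
import re


def prose_stats(text: str) -> dict:
    """Word count, sentence count, and mean words per sentence."""
    words = re.findall(r"[A-Za-z0-9']+", text)
    # Naive sentence split: break after ., !, or ? when followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    n_sent = max(len(sentences), 1)  # avoid division by zero on empty text
    return {
        "words": len(words),
        "sentences": len(sentences),
        "words_per_sentence": len(words) / n_sent,
    }


def is_brief(text: str, max_words: int = 60) -> bool:
    """Hypothetical threshold check for prompts that ask for 'brief' output."""
    return prose_stats(text)["words"] <= max_words
```

Running this over the base and tuned outputs for the same prompt would give a quick, if blunt, check on whether a length constraint was honored.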

This reveals something important about fine-tuning for prose: you can dramatically improve a model's writing quality with minimal data, but what you get is voice imitation, not stylistic versatility. Since my data only contained prose of a single style, the model failed to generalize to other styles and specific instructions.

To build a model that can actually adapt its voice, you'd need a much more robust data mix with diverse styles and careful labeling. But if you want a model that writes in your voice? 650 examples and 8 cents will get you surprisingly far.

Notes on Tinker

Tinker was seamless. It strikes an uncommon balance where it's both powerful and easy to use. The training, automatically distributed across GPUs, was exceptionally fast, and the TUI and final results were clear. I didn't have to adjust my workflow at all.

Open questions: Has anyone successfully trained for stylistic versatility rather than voice imitation? What's the minimum dataset size for prose generalization across different styles? Let me know!